Implementing HTTP/2 with Zero Downtime: A Blue-Green Deployment Case Study

Summary

This case study demonstrates how we implemented HTTP/2 on production Apache servers with zero downtime using a blue-green deployment strategy. When we discovered that our vendor-hosted HAProxy application load balancer's health checks only support HTTP/1.1, we designed a dual-port architecture separating customer traffic (port 443, HTTP/2) from health checks (port 8443, HTTP/1.1), then deployed it through staged phases including pilot testing, parallel infrastructure rollout, and seamless cutover across our four-server environment.

Note: Domain names have been anonymised for this article. All references to login.companyabc.com are used as examples and do not reflect actual production domain names.

The Problem

We enabled HTTP/2 on our Apache web servers for login.companyabc.com to improve performance. When we configured Apache with Protocols h2 http/1.1 on port 443, our load balancer's health checks immediately failed. All servers were marked as DOWN, causing a complete service outage.

Root Cause: The health check process in HAProxy (our vendor-hosted application load balancer) supports only HTTP/1.1. When it tried to communicate with HTTP/2-enabled ports, the protocol mismatch caused every health check to fail with "invalid response" errors.

Our pre-production environment (hosted on Vultr without a load balancer) worked perfectly with the same Apache HTTP/2 configuration. This confirmed the issue was specific to the load balancer health check mechanism.

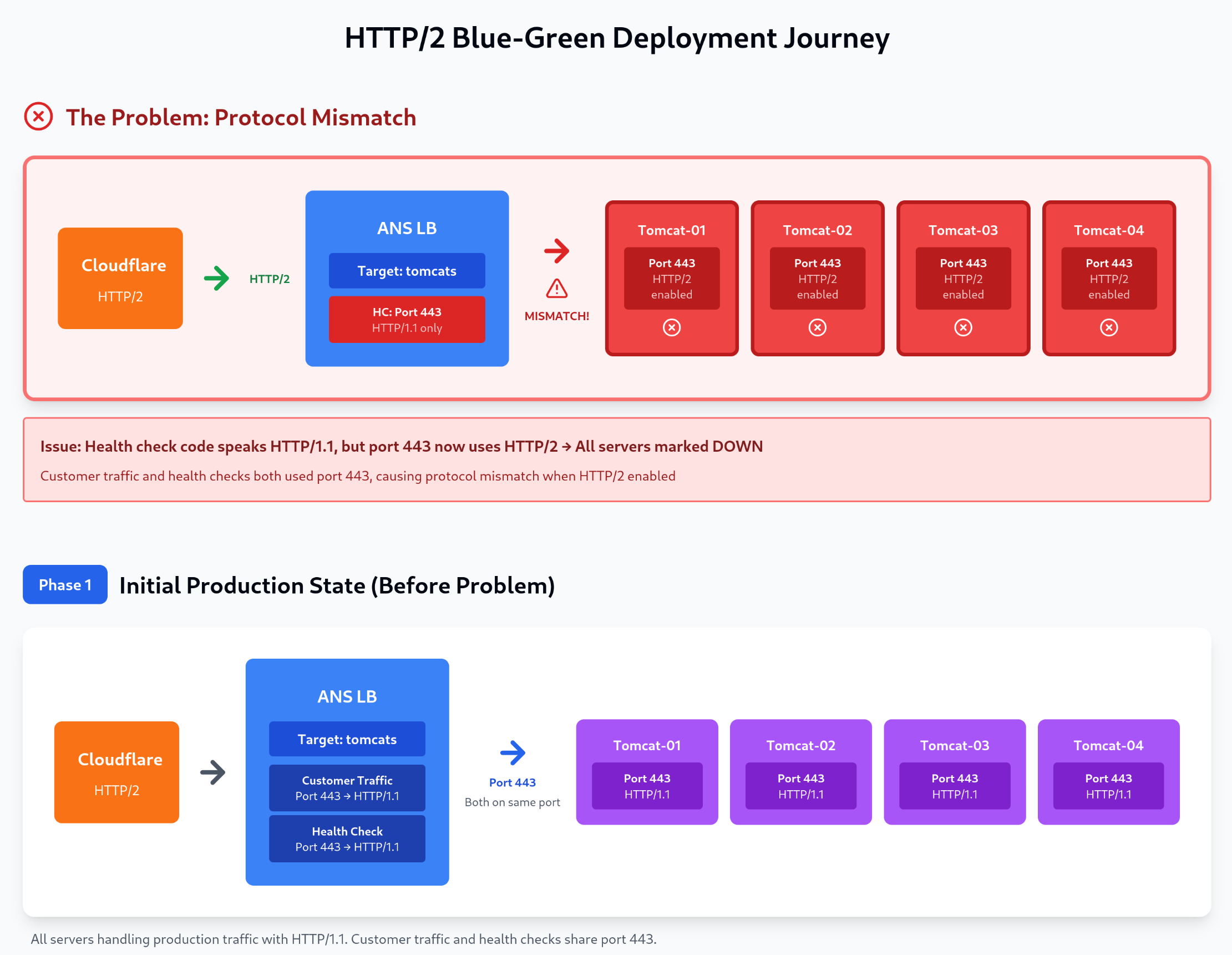

Deployment Journey Overview

The following diagram illustrates our complete deployment journey from the initial problem through to the final HTTP/2-enabled state. Each phase shows the traffic flow from Cloudflare through the vendor Load Balancer to our four Tomcat backend servers. The diagram clearly shows customer traffic and health check paths, ports, and protocols at each stage.

Figure 1: Complete HTTP/2 deployment progression showing seven phases: The Problem (protocol mismatch causing outage), Phase 1 (initial HTTP/1.1 state), Phase 2 (Tomcat-01 isolated in DRAIN mode), Phase 3 (dual-port configuration tested), Phase 4 (parallel infrastructure rolled out), Phase 5 (seamless listener cutover), and Phase 6 (HTTP/2 enabled with dedicated health check port). The diagram clearly distinguishes between customer traffic (port 443) and health check traffic (port 8443), showing how the solution separates these concerns to prevent protocol mismatch.

The Solution: Dual-Port Architecture

In partnership with our vendor technical team, we designed a workaround that separates customer traffic from health check infrastructure. We created two dedicated VirtualHosts:

- Port 443: Customer traffic (eventually HTTP/2-enabled)

- Port 8443: Dedicated HTTP/1.1-only health checks with a lightweight /healthcheck endpoint

This approach allows the load balancer to monitor server health using HTTP/1.1 while enabling HTTP/2 for actual user traffic.

Implementation Strategy: Blue-Green Deployment

We adopted a parallel infrastructure approach instead of a risky in-place upgrade:

- Build new infrastructure alongside existing production

- Validate thoroughly while production continues unchanged

- Perform seamless cutover with zero downtime

- Enable HTTP/2 as a separate, final enhancement

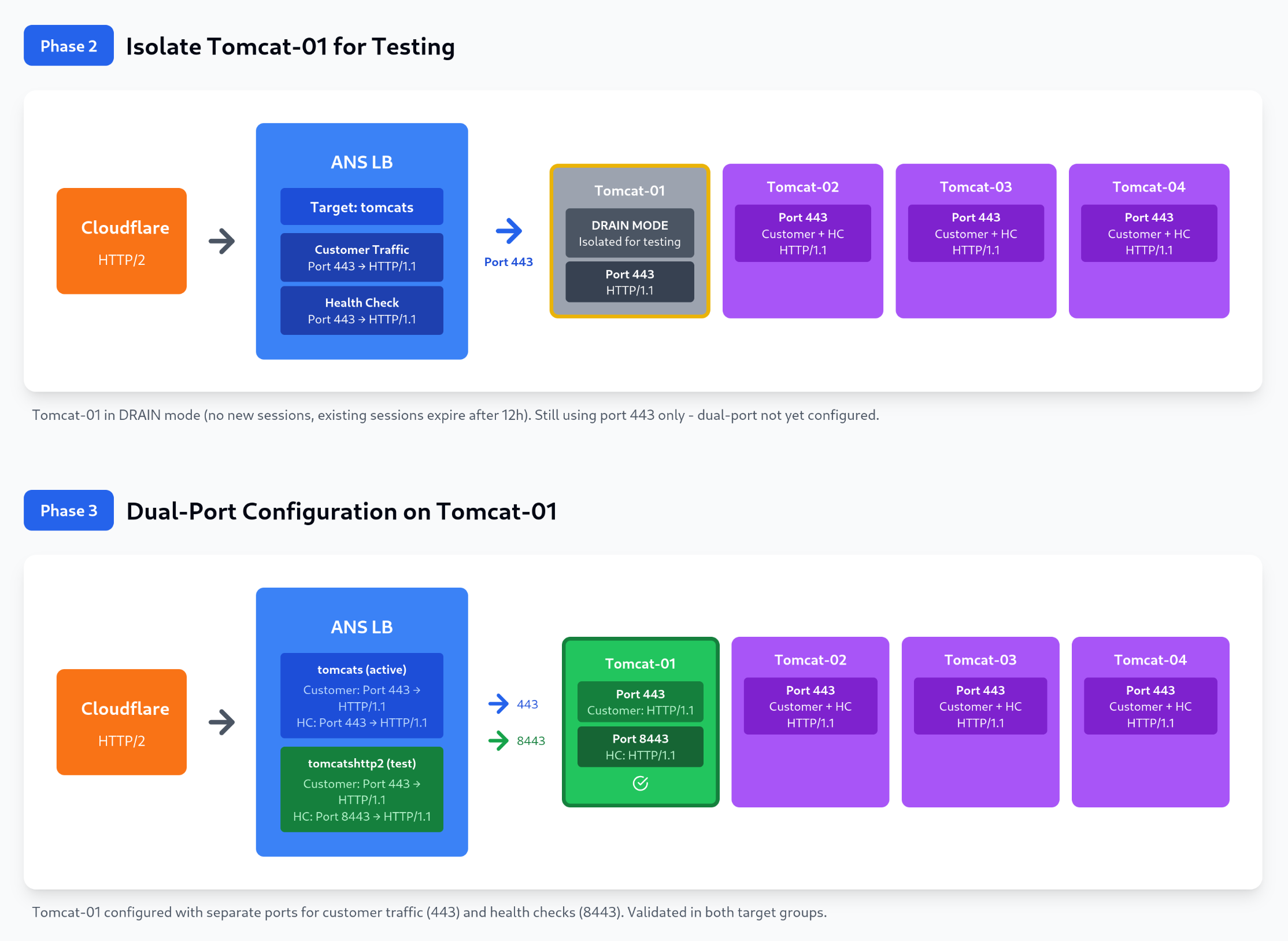

Phase 1: Pilot Testing on Drained Server

Understanding "DRAIN Mode":

When a Tomcat server is set to DRAIN mode in the load balancer:

- No new user sessions are created on that server

- Existing sessions continue until they expire (12-hour timeout in our configuration)

- The server becomes isolated from production traffic, making it safe for testing

- Health checks continue to monitor the server

Why We Didn't Enable HTTP/2 Initially:

We kept HTTP/1.1 on both ports during pilot testing to:

- Validate the dual-VirtualHost infrastructure works correctly

- Prove the server can exist in both target groups simultaneously

- Eliminate variables during testing (infrastructure change only, no protocol change)

- Ensure easy rollback if issues arose

This staged approach let us validate the health check solution before introducing HTTP/2 protocol negotiation.

Test Configuration on Tomcat-01:

We created two dedicated VirtualHosts with identical application logic but different purposes:

    # Port listening configuration
    # (Apache does not allow a comment on the same line as a directive,
    # so every comment sits on its own line.)
    # 443: customer traffic
    Listen 443
    # 8443: health checks
    Listen 8443

    # HTTP to HTTPS redirect
    <VirtualHost *:80>
        ServerName login.companyabc.com
        Redirect / https://login.companyabc.com/
    </VirtualHost>

    # VirtualHost 1: Customer Traffic Port (HTTP/1.1 for testing)
    <VirtualHost _default_:443>
        ServerName login.companyabc.com
        # Deliberately kept as HTTP/1.1 for validation
        Protocols http/1.1

        Include common-conf.d/ssl-vhost.conf
        Include common-conf.d/gzip.conf

        # Security headers and SSL configuration
        RequestHeader set X-Forwarded-Proto "https"
        Header edit Location ^http:// https://
        Header always set X-Frame-Options SAMEORIGIN
        Header always set X-Content-Type-Options nosniff

        ProxyRequests off
        ProxyPreserveHost on
        DocumentRoot "/var/www/html"

        # Application routing
        RedirectMatch "^/(?!web/|admin/|custom_error_pages/).*$" /web/

        # Proxy to Tomcat application
        ProxyPass /web/ http://127.0.0.1:8080/web/ timeout=600
        ProxyPassReverse /web/ http://127.0.0.1:8080/web/
        ProxyPass /admin/ http://127.0.0.1:8080/admin/
        ProxyPassReverse /admin/ http://127.0.0.1:8080/admin/
    </VirtualHost>

    # VirtualHost 2: Dedicated Health Check Port (HTTP/1.1 only)
    <VirtualHost _default_:8443>
        ServerName login.companyabc.com
        # Must remain HTTP/1.1 for health checks
        Protocols http/1.1

        Include common-conf.d/ssl-vhost.conf
        Include common-conf.d/gzip.conf

        # Security headers
        RequestHeader set X-Forwarded-Proto "https"
        Header edit Location ^http:// https://
        Header always set X-Frame-Options SAMEORIGIN
        Header always set X-Content-Type-Options nosniff

        ProxyRequests off
        ProxyPreserveHost on
        DocumentRoot "/var/www/html"

        # Lightweight health check endpoint - bypasses Tomcat
        <Location "/healthcheck">
            # Don't proxy to Tomcat
            ProxyPass !
            # Serve directly from Apache
            SetHandler none
            # Allow access
            Require all granted
        </Location>

        # Standard application proxying
        ProxyPass /web/ http://127.0.0.1:8080/web/ timeout=600
        ProxyPassReverse /web/ http://127.0.0.1:8080/web/
        ProxyPass /admin/ http://127.0.0.1:8080/admin/
        ProxyPassReverse /admin/ http://127.0.0.1:8080/admin/
    </VirtualHost>
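Because the health check port must never advertise h2, it can help to lint the configuration before each reload. The following is a minimal sketch rather than part of our actual tooling: the function names vhost_protocols and port_is_http1_only are hypothetical, and it assumes each Protocols directive sits on its own line inside its VirtualHost block.

```shell
# Hypothetical pre-flight helpers; names are illustrative only.

# Print "<vhost-address><TAB><protocols>" for every VirtualHost block in a file.
vhost_protocols() {
  awk '
    /^[ \t]*<VirtualHost[ \t]/ {
      vhost = $2
      sub(/>$/, "", vhost)
      protos = "(inherited)"
      in_vhost = 1
      next
    }
    in_vhost && /^[ \t]*Protocols[ \t]/ {
      protos = $2
      for (i = 3; i <= NF; i++) protos = protos " " $i
      next
    }
    /^[ \t]*<\/VirtualHost>/ {
      if (in_vhost) print vhost "\t" protos
      in_vhost = 0
    }
  ' "$1"
}

# Succeed only if a vhost on the given port exists and every such vhost
# declares exactly "Protocols http/1.1".
port_is_http1_only() {
  vhost_protocols "$1" | awk -F '\t' -v port="$2" '
    $1 ~ (":" port "$") { found = 1; if ($2 != "http/1.1") bad = 1 }
    END { if (found && !bad) exit 0; exit 1 }
  '
}
```

A call such as port_is_http1_only /path/to/vhosts.conf 8443 (the path is a placeholder) returns non-zero if the port 8443 VirtualHost is missing or lists anything other than http/1.1, including any trailing text on the Protocols line.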

Health Check Endpoint Setup:

    # Create health check directory and response page
    mkdir -p /var/www/html/healthcheck
    echo "OK" > /var/www/html/healthcheck/index.html

Graceful Configuration Reload:

    httpd -t                  # Validate syntax
    systemctl reload httpd    # Graceful reload - no connection drops
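The two commands above can be chained so that a reload never follows a failed syntax check. This is a small sketch under that assumption; the function name reload_if_valid is ours for illustration.

```shell
# Reload Apache only when the syntax check passes; a failed check leaves
# the running configuration untouched.
reload_if_valid() {
  if httpd -t; then
    systemctl reload httpd
  else
    echo "httpd -t failed; reload skipped" >&2
    return 1
  fi
}
```

Returning non-zero on failure lets a calling script stop before it touches the next server.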

Our vendor technical team created a new target group tomcatshttp2 with health checks pointing to GET login.companyabc.com:8443/healthcheck/. We validated that Tomcat-01 passed health checks in both the old (tomcats) and new (tomcatshttp2) target groups simultaneously.
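A server's readiness for both target groups can also be checked by hand from a host that can reach it directly. The sketch below is an illustration, not the load balancer's own check: probe_http11 and passes_both_checks are hypothetical names, the server IP is supplied by the caller, and it relies on curl's --http1.1, --resolve, and --write-out options.

```shell
# Hypothetical helper: request a path over HTTP/1.1 against one specific
# server and print only the status code (curl prints 000 if the request fails).
probe_http11() {
  local host=$1 ip=$2 port=$3 path=$4
  curl --silent --http1.1 --max-time 5 \
       --resolve "${host}:${port}:${ip}" \
       --output /dev/null --write-out '%{http_code}' \
       "https://${host}:${port}${path}"
}

# Succeed only if both the old check (port 443) and the new check (port 8443)
# return 200 for the same server.
passes_both_checks() {
  local host=$1 ip=$2
  [ "$(probe_http11 "$host" "$ip" 443 /web/login)" = "200" ] &&
  [ "$(probe_http11 "$host" "$ip" 8443 /healthcheck/)" = "200" ]
}
```

Using --resolve pins the request to one server while keeping the real hostname for TLS and the Host header, so the result reflects that server alone rather than whatever the load balancer would pick.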

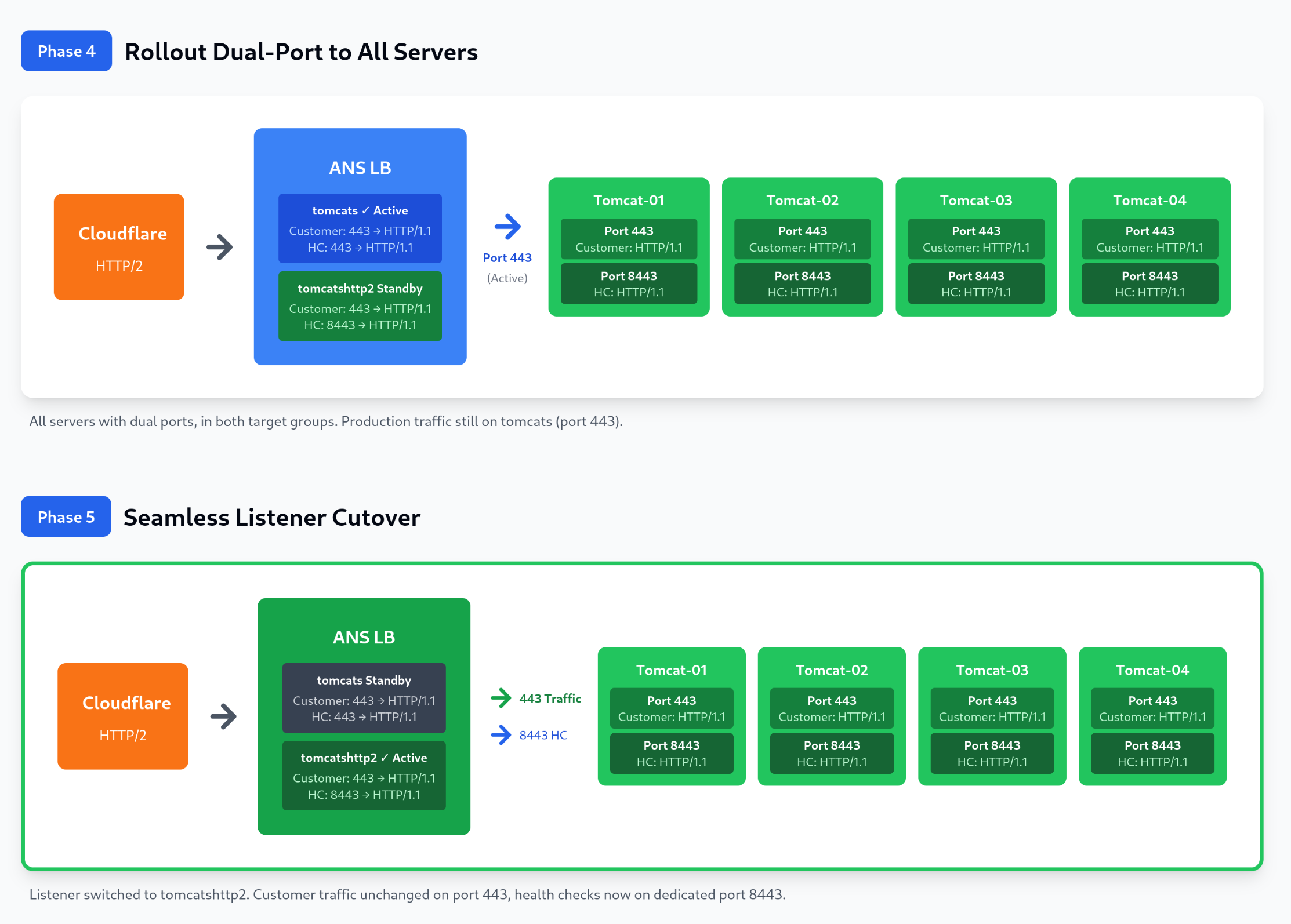

Phase 2: Production Rollout

After successful pilot testing, we rolled out the same configuration to production servers (Tomcat-02, 03, 04):

Rollout Process:

- Applied identical dual-VirtualHost configuration to each server

- Created /healthcheck directory and endpoint on each server

- Used systemctl reload httpd for graceful, zero-downtime updates

- Our vendor team added each server to tomcatshttp2 target group after completion

- Verified health check status for each server before proceeding to the next
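The one-server-at-a-time discipline described in this list can be expressed as a loop that halts at the first failure. This is a sketch only: rollout is a hypothetical name, and the per-server step (configure, reload, verify) is passed in as a function because its details depend on the environment.

```shell
# Run a per-server step against each server in order and stop at the first
# failure, so a bad change never reaches the remaining servers.
rollout() {
  local step=$1
  shift
  local server
  for server in "$@"; do
    echo "==> ${server}"
    if ! "$step" "$server"; then
      echo "halting: ${server} failed" >&2
      return 1
    fi
  done
}
```

For example, rollout deploy_one tomcat-02 tomcat-03 tomcat-04 would call a user-defined deploy_one function once per server and skip tomcat-04 if tomcat-03 fails.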

Infrastructure State After Rollout:

- Target Group tomcats: All servers, health checks via port 443 /web/login, handling all customer traffic

- Target Group tomcatshttp2: All servers, health checks via port 8443 /healthcheck, monitoring but not serving traffic

This parallel infrastructure allowed us to validate the new health check system while production traffic continued unaffected.

Phase 3: The Seamless Cutover

As shown in Phase 5 of the deployment diagram, the listener cutover was the critical moment where we switched from the old infrastructure to the new.

Load Balancer Configuration Change:

In collaboration with our vendor, we switched the listener's default target group from tomcats to tomcatshttp2. This was a single configuration change in the load balancer UI that took approximately 30 seconds.

Why Zero Downtime:

- Same servers in both target groups

- Same port 443 configuration (HTTP/1.1)

- Same application responses

- Existing connections continued normally

- New connections immediately routed to new target group

- Only operational change: health checks switched from port 443 to port 8443

Session Stickiness Note: The cutover reset session cookies (different between target groups), potentially logging out active users. We performed this change during a low-traffic period to minimize impact.

Monitoring During Cutover:

    # Watched Apache logs on all servers
    tail -f /var/log/httpd/access_log

    # Customer traffic continued on port 443:
    10.0.0.7 - - [29/Sep/2025:11:15:33 +0100] "GET /web/login HTTP/1.1" 200 3507

    # Health checks now on dedicated port 8443:
    10.0.0.7 - - [29/Sep/2025:11:15:35 +0100] "GET /healthcheck/ HTTP/1.1" 200 88
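Watching a live tail works for a short cutover, but a summary is easier to compare across four servers. The sketch below is illustrative: summarize_access_log is a hypothetical name, it assumes the log format shown above, and it classifies requests by path because these log lines do not record the port.

```shell
# Count requests by kind (healthcheck vs customer) and protocol.
# Reads the named log files, or standard input when none are given.
summarize_access_log() {
  awk '{
    proto = $8
    gsub(/"/, "", proto)
    kind = ($7 ~ /^\/healthcheck/) ? "healthcheck" : "customer"
    count[kind " " proto]++
  }
  END { for (k in count) print k, count[k] }' "$@" | LC_ALL=C sort
}
```

Fed the two sample lines above, it prints "customer HTTP/1.1 1" and "healthcheck HTTP/1.1 1".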

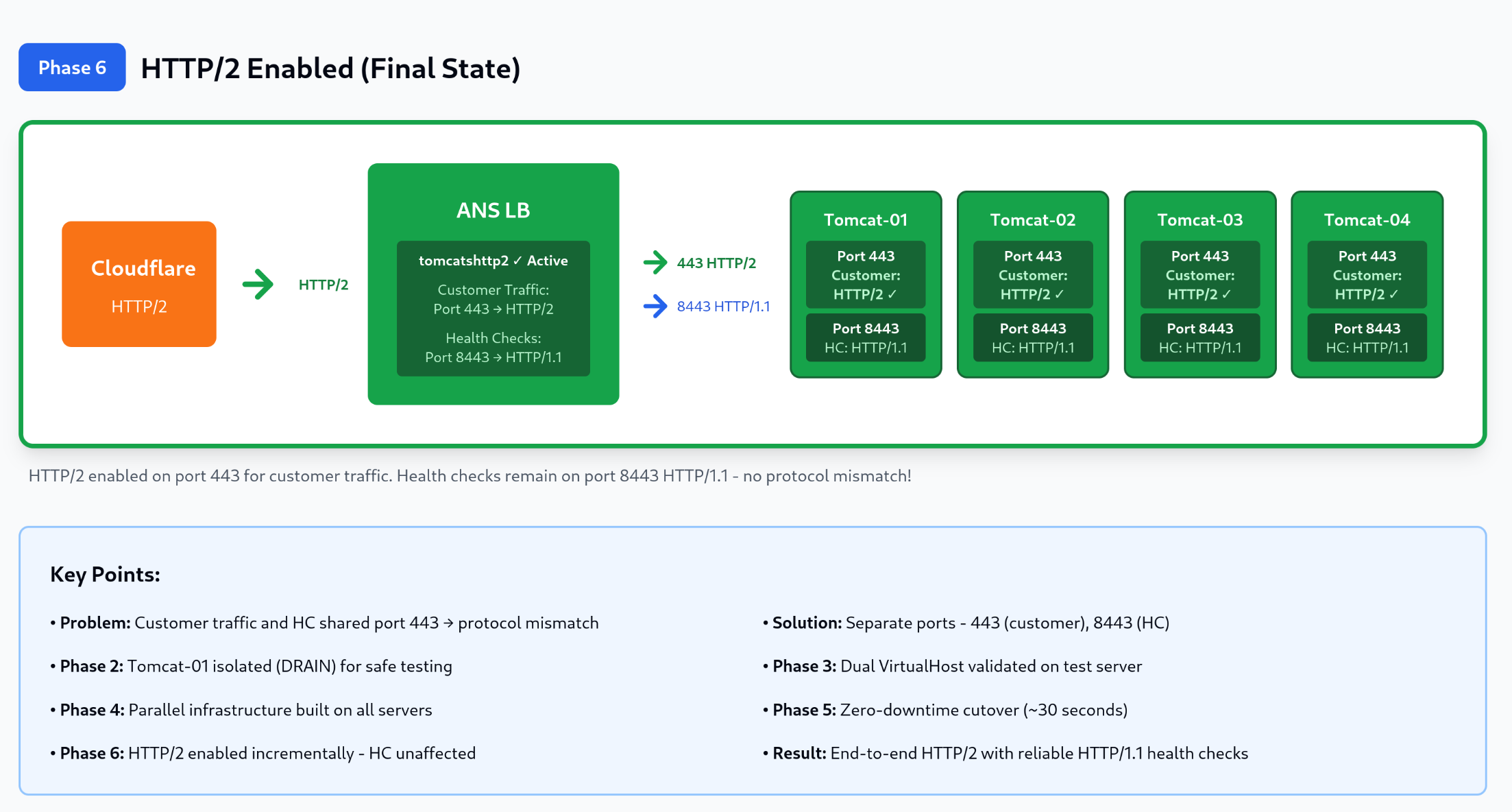

Phase 4: HTTP/2 Enablement

With stable infrastructure and proven health checks, we enabled HTTP/2 on customer-facing port 443.

Final Production Configuration:

    # Apache does not allow a comment on the same line as a directive,
    # so every comment sits on its own line.
    Listen 443
    Listen 8443

    # VirtualHost 1: Customer Traffic Port - HTTP/2 ENABLED
    <VirtualHost _default_:443>
        ServerName login.companyabc.com
        # HTTP/2 enabled! Falls back to HTTP/1.1 for older clients
        Protocols h2 http/1.1

        Include common-conf.d/ssl-vhost.conf
        Include common-conf.d/gzip.conf

        # Security headers
        RequestHeader set X-Forwarded-Proto "https"
        Header edit Location ^http:// https://
        Header always set X-Frame-Options SAMEORIGIN
        Header always set X-Content-Type-Options nosniff

        ProxyRequests off
        ProxyPreserveHost on
        DocumentRoot "/var/www/html"

        RedirectMatch "^/(?!web/|admin/|custom_error_pages/).*$" /web/

        ProxyPass /web/ http://127.0.0.1:8080/web/ timeout=600
        ProxyPassReverse /web/ http://127.0.0.1:8080/web/
        ProxyPass /admin/ http://127.0.0.1:8080/admin/
        ProxyPassReverse /admin/ http://127.0.0.1:8080/admin/
    </VirtualHost>

    # VirtualHost 2: Health Check Port - REMAINS HTTP/1.1
    <VirtualHost _default_:8443>
        ServerName login.companyabc.com
        # Must stay HTTP/1.1 for health checks
        Protocols http/1.1

        Include common-conf.d/ssl-vhost.conf
        Include common-conf.d/gzip.conf

        RequestHeader set X-Forwarded-Proto "https"
        Header edit Location ^http:// https://
        Header always set X-Frame-Options SAMEORIGIN
        Header always set X-Content-Type-Options nosniff

        ProxyRequests off
        ProxyPreserveHost on
        DocumentRoot "/var/www/html"

        # Lightweight health check endpoint
        <Location "/healthcheck">
            ProxyPass !
            SetHandler none
            Require all granted
        </Location>

        ProxyPass /web/ http://127.0.0.1:8080/web/ timeout=600
        ProxyPassReverse /web/ http://127.0.0.1:8080/web/
        ProxyPass /admin/ http://127.0.0.1:8080/admin/
        ProxyPassReverse /admin/ http://127.0.0.1:8080/admin/
    </VirtualHost>

We enabled HTTP/2 on port 443 one server at a time using graceful Apache reloads. We validated each change before proceeding to the next server.

Verification:

    # Apache access logs showing HTTP/2 for customer traffic:
    10.0.0.7 - - [29/Sep/2025:11:33:41 +0100] "GET /web/login HTTP/2.0" 200 3507

    # Health checks still using HTTP/1.1 on dedicated port:
    10.0.0.7 - - [29/Sep/2025:11:33:42 +0100] "GET /healthcheck/ HTTP/1.1" 200 88
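The same split can be confirmed from the client side by asking curl which protocol it negotiated. This is a sketch under assumptions: negotiated_http_version and verify_protocol_split are hypothetical names, the check must run from a host that can reach the Apache server directly on both ports, and it uses curl's %{http_version} write-out variable.

```shell
# Print the HTTP version curl ended up using ("1.1", "2", ...).
# Extra arguments are passed straight through to curl.
negotiated_http_version() {
  curl --silent --output /dev/null --max-time 5 \
       --write-out '%{http_version}' "$@"
}

# Offer HTTP/2 on both ports: the customer port should accept it,
# the health check port should fall back to HTTP/1.1.
verify_protocol_split() {
  local host=$1 ip=$2 customer health
  customer=$(negotiated_http_version --http2 --resolve "${host}:443:${ip}" "https://${host}/web/login")
  health=$(negotiated_http_version --http2 --resolve "${host}:8443:${ip}" "https://${host}:8443/healthcheck/")
  [ "$customer" = "2" ] && [ "$health" = "1.1" ]
}
```

Offering HTTP/2 on port 8443 and seeing HTTP/1.1 come back is the useful half of this check: it shows the health check VirtualHost refuses the upgrade rather than merely never being asked.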

Results and Key Takeaways

Achievements:

- Zero downtime throughout entire implementation

- HTTP/2 successfully enabled for all customer traffic

- Improved health check reliability with dedicated, lightweight endpoint

- Clear separation of concerns (customer traffic vs. operational monitoring)

Success Factors:

- Pilot testing on drained server reduced risk before production rollout

- Parallel infrastructure allowed thorough validation without affecting production

- Staged approach separated infrastructure changes from protocol changes

- Graceful Apache reloads eliminated service interruptions

- Dedicated VirtualHosts provided clear separation between customer traffic and health checks

Post-Implementation:

- Kept legacy tomcats target group for one week as rollback safety net

- Restricted DMZ network policy to allow only TCP/8443 for health checks (security best practice)

- Load balancer OS upgrade postponed until HTTP/2 implementation proven stable

Conclusion

This project demonstrates that complex infrastructure upgrades can be executed without service disruption when approached methodically. By dedicating port 8443 to HTTP/1.1 health checks and port 443 to HTTP/2 customer traffic, we resolved the mismatch between HAProxy's HTTP/1.1-only health checks and our HTTP/2-enabled Apache servers.

The blue-green deployment strategy proved essential: pilot testing validated our approach, parallel infrastructure enabled risk-free testing, and the staged rollout minimized complexity. The result was a seamless transition that achieved our performance goals while maintaining operational reliability.

Key takeaway: Separating infrastructure changes from feature enhancements reduces risk and creates more maintainable architecture. This dual-port approach not only solved our immediate problem but established a robust foundation for future improvements.